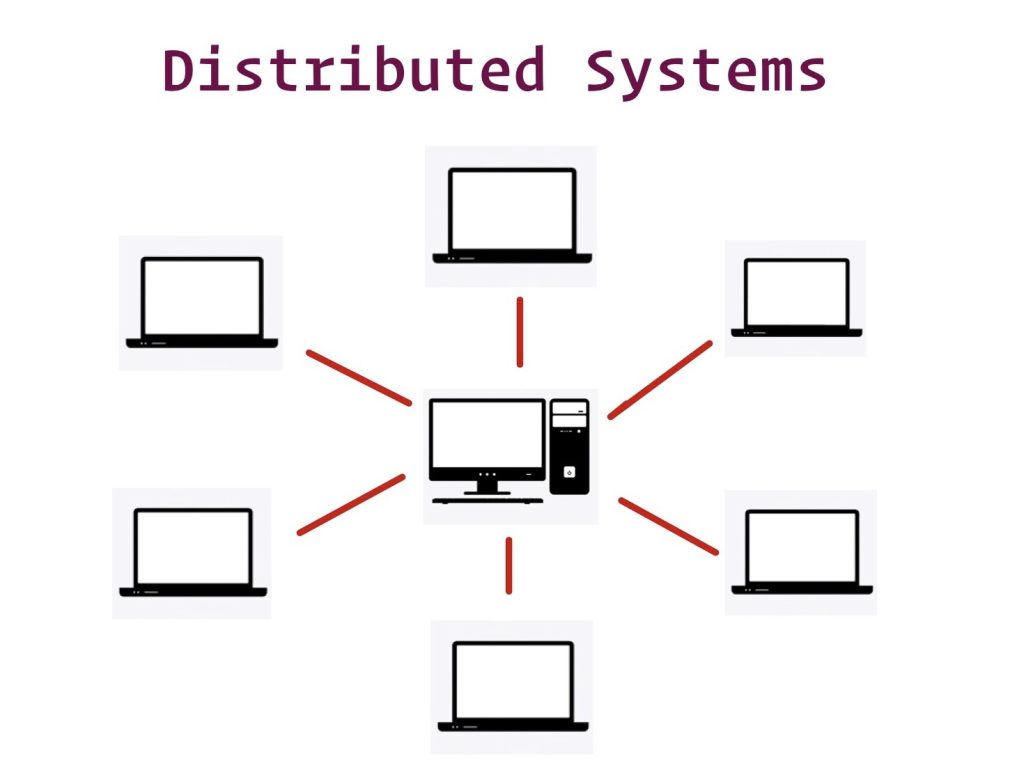

Distributed systems are the backbone of modern, scalable applications, allowing them to handle large workloads and ensure high availability. Python, with its versatility and extensive libraries, serves as a robust tool for building distributed systems. In this article, we’ll explore the principles of distributed systems and provide practical code examples using Python.

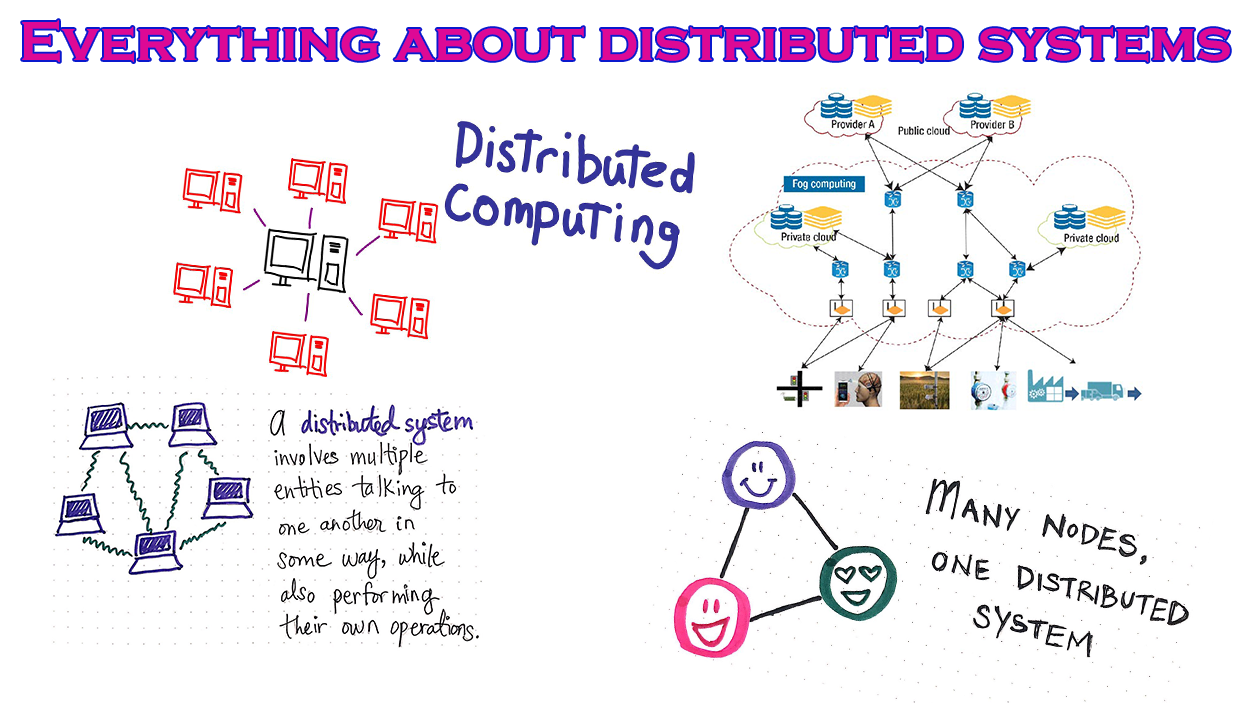

1. Introduction to Distributed Systems:

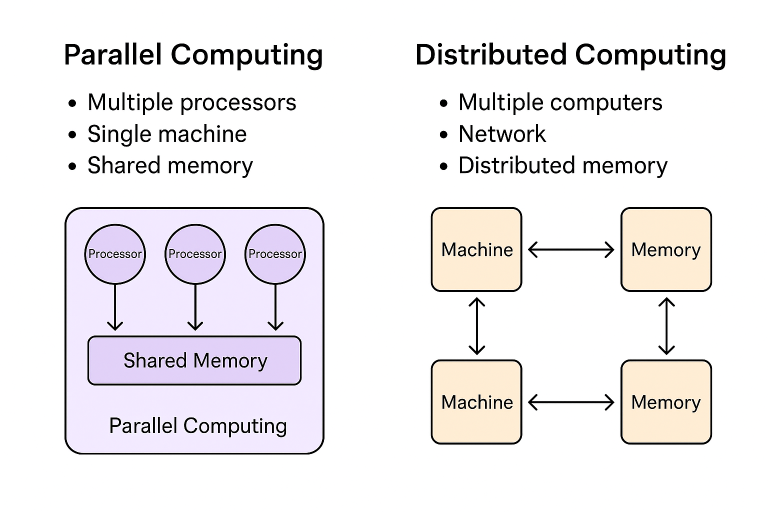

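A distributed system is a collection of independent computers that communicate over a network and coordinate to achieve a common goal. Key characteristics include scalability (adding machines to handle more load), fault tolerance (continuing to work when individual nodes fail), and the ability to serve many concurrent users or tasks.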
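To make fault tolerance a little more concrete, here is a minimal, stdlib-only sketch of client-side failover: the caller tries a list of replicas in order and uses the first one that answers. The `call_with_failover` helper and the `node_down`/`node_up` stand-ins are hypothetical; a real system would also add timeouts, retries with backoff, and health checks.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def call_with_failover(replicas: Sequence[Callable[[], T]]) -> T:
    """Hypothetical helper: try each replica in order, return the first success."""
    errors = []
    for replica in replicas:
        try:
            return replica()
        except ConnectionError as exc:  # treat a connection failure as "node is down"
            errors.append(exc)
    raise RuntimeError(f"all {len(replicas)} replicas failed: {errors}")


# Hypothetical stand-ins for remote nodes
def node_down():
    raise ConnectionError("node-a unreachable")


def node_up():
    return "result from node-b"


print(call_with_failover([node_down, node_up]))  # result from node-b
```

The caller keeps working even though one node is unreachable, which is the essence of fault tolerance through redundancy.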

2. Python Libraries for Distributed Systems:

- Celery: A distributed task queue system for handling asynchronous and distributed tasks.

- Pyro4: Short for Python Remote Objects, a library that lets a Python program call methods on objects living in another process or on another machine, in the style of Remote Procedure Call (RPC).

- Dask: A parallel computing library that scales familiar Python, NumPy, and pandas workloads across multiple cores or a cluster, designed for analytics.

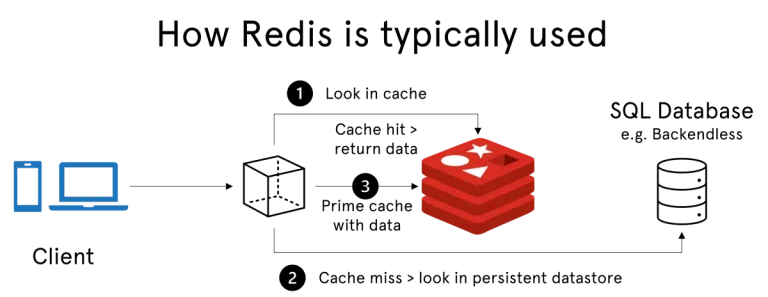

- Apache Kafka: While Kafka is written in Java, Python clients like

confluent_kafkaallow Python applications to interact with Kafka.

3. Sample Code: Asynchronous Task Execution with Celery:

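```python
# Install Celery:
# pip install celery
# This example also assumes a RabbitMQ broker is running on localhost.

# celery_app.py
from celery import Celery

# Create a Celery instance: RabbitMQ carries task messages (broker),
# and the RPC backend sends results back to the caller
app = Celery('tasks', broker='pyamqp://guest@localhost//', backend='rpc://')


# Define a Celery task
@app.task
def add(x, y):
    return x + y
```

```python
# task_executor.py
from celery_app import add

# Send the task to the broker; a worker process picks it up and runs it
result = add.delay(4, 6)

# Block until the worker returns the result (give up after 10 seconds)
print(f"Task result: {result.get(timeout=10)}")
```

Before running `task_executor.py`, start at least one worker with `celery -A celery_app worker --loglevel=INFO`; otherwise the task waits in the queue and `result.get()` times out. This code demonstrates a simple Celery setup where the `add` function is executed asynchronously by a worker process, which can run on a different machine from the caller, allowing for distributed task execution.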
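Celery needs a broker and worker processes to run, but the pattern underneath is simple: a producer puts task messages on a queue and workers pull them off. The stdlib-only sketch below imitates that pattern with `queue.Queue` and threads inside a single process. It illustrates the idea only; it is not Celery's API, and `worker` is a hypothetical helper.

```python
import queue
import threading


def worker(tasks, results, lock):
    """Hypothetical worker loop: pull (task_id, func, args) items until a None sentinel."""
    while True:
        item = tasks.get()
        if item is None:  # sentinel: no more work for this worker
            break
        task_id, func, args = item
        value = func(*args)
        with lock:  # protect the shared results dict
            results[task_id] = value


def add(x, y):
    return x + y


tasks = queue.Queue()
results = {}
lock = threading.Lock()

# Start three workers, loosely analogous to three Celery worker processes
workers = [threading.Thread(target=worker, args=(tasks, results, lock)) for _ in range(3)]
for w in workers:
    w.start()

# Enqueue work, loosely analogous to calling add.delay(i, 10)
for i in range(5):
    tasks.put((i, add, (i, 10)))

# One sentinel per worker, queued after all real tasks (the queue is FIFO)
for _ in workers:
    tasks.put(None)
for w in workers:
    w.join()

print(dict(sorted(results.items())))  # {0: 10, 1: 11, 2: 12, 3: 13, 4: 14}
```

Celery adds what this toy lacks: messages that persist in a broker, workers on other machines, retries, and result backends.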

4. Building a Distributed System with Pyro4:

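```python
# Install Pyro4:
# pip install Pyro4

# server.py
import Pyro4


@Pyro4.expose  # make the class's public methods callable remotely
class DistributedSystem:
    def process_data(self, data):
        # Processing logic goes here
        return f"Processed data: {data}"


# Listen on a fixed host/port and register the class under a fixed object ID,
# so the client can build the URI without copying it from the console
daemon = Pyro4.Daemon(host="localhost", port=9090)
uri = daemon.register(DistributedSystem, objectId="distributed_system")
print(f"URI: {uri}")

daemon.requestLoop()  # serve incoming calls until interrupted
```

```python
# client.py
import Pyro4

# Must match the object ID, host, and port used by server.py
uri = "PYRO:distributed_system@localhost:9090"
distributed_system = Pyro4.Proxy(uri)

# Looks like a local method call, but runs on the server
result = distributed_system.process_data("Input Data")
print(result)  # Processed data: Input Data
```

In this example, Pyro4 is used to create a simple distributed system. The `DistributedSystem` class is exposed and registered with a daemon, and a client invokes its methods remotely through a proxy.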
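Pyro4 is a third-party package; Python's standard library ships a simpler RPC mechanism in `xmlrpc`. The sketch below is an assumed stdlib equivalent of the same server/client exchange using `SimpleXMLRPCServer` and `ServerProxy`. To keep it self-contained, it runs the server on a background thread in the same script; binding to port 0 asks the OS for any free port.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


class DistributedSystem:
    def process_data(self, data):
        return f"Processed data: {data}"


# Server side: expose the instance's public methods over XML-RPC
server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False, allow_none=True)
server.register_instance(DistributedSystem())
port = server.server_address[1]  # the port the OS actually assigned

thread = threading.Thread(target=server.serve_forever, daemon=True)
thread.start()

# Client side: the proxy turns method calls into HTTP requests
with ServerProxy(f"http://127.0.0.1:{port}/") as proxy:
    result = proxy.process_data("Input Data")

server.shutdown()
server.server_close()
print(result)  # Processed data: Input Data
```

Unlike Pyro4, XML-RPC only passes basic types such as numbers, strings, lists, and dicts, and has no built-in name server, so it suits quick experiments rather than replacing Pyro4.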

5. Parallel Computing with Dask:

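```python
# Install Dask:
# pip install dask

import dask
from dask import delayed


@delayed
def square(x):
    return x * x


data = [1, 2, 3, 4, 5]

# Build one lazy task per element; nothing is computed yet
squared_data = [square(x) for x in data]

# Run all tasks; independent tasks can execute in parallel
result = dask.compute(*squared_data)

print(result)  # (1, 4, 9, 16, 25)
```

Dask enables parallel computing through delayed functions: calling a `@delayed` function does not run it but records the call as a task, and `dask.compute` later executes all recorded tasks, running independent ones in parallel (by default on a local thread pool). This example squares a list of numbers that way. For a function this cheap, scheduling overhead outweighs any speedup; `delayed` pays off when each task does substantial work.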
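To show what `@delayed` and `dask.compute` do conceptually, here is a toy, stdlib-only imitation: decorated calls return a `Lazy` node instead of running, and `compute` evaluates the top-level nodes on a thread pool. The names `Lazy`, `lazy`, `evaluate`, and `compute` are hypothetical, and this is not how Dask is implemented internally.

```python
import functools
from concurrent.futures import ThreadPoolExecutor


class Lazy:
    """Toy stand-in for a delayed object: stores a call instead of running it."""

    def __init__(self, func, args):
        self.func = func
        self.args = args


def lazy(func):
    """Hypothetical decorator: calling the wrapped function returns a Lazy node."""
    @functools.wraps(func)
    def wrapper(*args):
        return Lazy(func, args)
    return wrapper


def evaluate(node):
    """Recursively evaluate a node, resolving Lazy arguments first."""
    if not isinstance(node, Lazy):
        return node
    args = [evaluate(a) for a in node.args]
    return node.func(*args)


def compute(*nodes, max_workers=4):
    """Evaluate independent top-level nodes in parallel on a thread pool."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return tuple(pool.map(evaluate, nodes))


@lazy
def square(x):
    return x * x


@lazy
def total(*values):
    return sum(values)


squared = [square(x) for x in [1, 2, 3, 4, 5]]
print(compute(*squared))         # (1, 4, 9, 16, 25)
print(compute(total(*squared)))  # (55,)
```

Real Dask goes much further: it builds a full task graph, reuses the results of shared sub-tasks instead of recomputing them, and can hand the graph to a multi-process or cluster scheduler.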

6. Using Apache Kafka for Message Brokering:

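```python
# Install confluent_kafka:
# pip install confluent_kafka
# Assumes a Kafka broker at localhost:9092 with a 'test' topic
# (or topic auto-creation enabled).

from confluent_kafka import Producer

# Configure the Kafka producer with the broker address
producer = Producer({'bootstrap.servers': 'localhost:9092'})

# Queue a message for the 'test' topic; it is sent asynchronously in the background
producer.produce('test', key='key', value='Hello, Kafka!')

# Block until all queued messages have been delivered (or have failed)
producer.flush()
```

This code demonstrates producing a keyed message to a Kafka topic using the `confluent_kafka` library: `produce()` only queues the message locally, and `flush()` waits until it has actually been delivered to the broker.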
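Kafka stores each topic as a set of append-only partitions, and each consumer tracks its own read position (offset) per partition. Messages that share a key go to the same partition, which is why ordering is preserved per key. The in-memory model below illustrates that idea only: `ToyTopic` and `ToyConsumer` are hypothetical, and the CRC32 key hash is a stand-in for whatever partitioner a real client is configured with.

```python
import zlib


class ToyTopic:
    """Hypothetical in-memory stand-in for a partitioned, append-only topic."""

    def __init__(self, num_partitions=3):
        self.partitions = [[] for _ in range(num_partitions)]

    def produce(self, key, value):
        # Stand-in partitioner: same key -> same partition (not a real client's algorithm)
        p = zlib.crc32(key.encode()) % len(self.partitions)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1  # (partition, offset)


class ToyConsumer:
    """Reads every partition sequentially and remembers its own offsets."""

    def __init__(self, topic):
        self.topic = topic
        self.offsets = [0] * len(topic.partitions)

    def poll(self):
        batch = []
        for p, log in enumerate(self.topic.partitions):
            batch.extend(log[self.offsets[p]:])  # only messages not yet seen
            self.offsets[p] = len(log)
        return batch


topic = ToyTopic()
for i in range(3):
    topic.produce("user-1", f"event {i}")
topic.produce("user-2", "event A")

consumer = ToyConsumer(topic)
events = consumer.poll()
user1 = [v for k, v in events if k == "user-1"]
print(user1)            # ['event 0', 'event 1', 'event 2']
print(consumer.poll())  # [] (nothing new since the last poll)
```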

7. Conclusion:

Building distributed systems with Python opens the door to scalable, fault-tolerant applications. Whether leveraging Celery for asynchronous